Tuesday, October 20, 2009

I've Moved

I moved my blog/site to www.jpmcauley.com this week. Moving forward, all updates will be posted there.

Sunday, August 9, 2009

Cloud Is Stable: Let's Fix Some Design Flaws

We've had a full production cloud environment for a couple of months now and everything is running stable. The networking portion of this offering has provided everything we've hoped for: rapid provisioning, centralized management, and lower cost per customer environment.

We've got roughly 15 enterprise customers on the cloud now and I think the largest challenge we've faced is helping customers understand this new technology and how it changes things for them. For us, it's a really great thing to be able to get an order in for a new environment, speak with the customer to understand exactly what they want, and then have that environment completely built within a few hours. Provisioning a new cluster of firewalls literally takes less than an hour now from start to finish, including building the security policies.

The biggest challenge we've run into is with the shared F5 load balancers. We knew up front that this would present some challenges so it wasn't a surprise, but we're now really trying to put some focus around it. The problem is that because of where the F5 cluster sits and how we're doing a one-armed load balancing configuration, we can't offer customers SSL offload. Even if we could, F5 has no way to limit resources on a per-customer basis. We were promised this capability in v10 of their software but when it was released, we were disappointed because the features still weren't there.

Here is a summary of the resource allocation issue. The F5 load balancers are very powerful appliances. They can handle lots of requests and are probably the best load balancers on the market. For the cloud offering, these load balancers have to be shared among many different customers. With the way a customer's cloud environment can be carved up and depending on many different factors around the customer's application, IP traffic can look very different from one customer to another. When we designed this solution, we estimated how many customers we would be able to provision in this virtual environment. If a few customers have huge traffic loads or their apps require some kind of special handling for their packets, they could end up chewing up a lot more resources on the F5 cluster than the average customer would. This risk could significantly reduce how many customers we are actually able to provision on parts of the cloud infrastructure, which of course would reduce overall profits for the offering.

Cisco is not known for their load balancing products but they do seem to have an offering that holds a lot of promise. The ACE load balancer has a virtualization feature in it where we can actually provision customer load balancers in a virtual state off one physical cluster. Resources can be dedicated to a particular customer and they can have guarantees for those resources. For example, we could say that customer X gets 500 transactions per second of SSL handling, can use 10% of the load balancer's CPU, and can have up to 50,000 concurrent sessions running at any one time. This helps us with predictability and cost modeling because we can now know that each customer we provision in that environment will have the resources they were promised when they signed the contract.
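To make the cost modeling point concrete, here is a minimal Python sketch of the arithmetic. The per-customer guarantee uses the example numbers above. The cluster totals and all of the names (Guarantee, CLUSTER_TOTALS, max_customers) are hypothetical placeholders for illustration, not ACE specifications or ACE configuration syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guarantee:
    """Resources promised to one customer (or available on the whole cluster)."""
    ssl_tps: int               # SSL transactions per second
    cpu_percent: float         # share of load balancer CPU
    concurrent_sessions: int   # concurrent sessions


# Per-customer guarantee taken from the example in the post.
CUSTOMER_X = Guarantee(ssl_tps=500, cpu_percent=10.0, concurrent_sessions=50_000)

# Hypothetical cluster totals, made up for illustration only.
CLUSTER_TOTALS = Guarantee(ssl_tps=7_500, cpu_percent=100.0,
                           concurrent_sessions=1_000_000)


def max_customers(cluster: Guarantee, per_customer: Guarantee) -> int:
    """How many identical guarantees fit; the scarcest resource decides."""
    return int(min(
        cluster.ssl_tps // per_customer.ssl_tps,
        cluster.cpu_percent // per_customer.cpu_percent,
        cluster.concurrent_sessions // per_customer.concurrent_sessions,
    ))


if __name__ == "__main__":
    print(max_customers(CLUSTER_TOTALS, CUSTOMER_X))
```

With these made-up totals, CPU is the limiting resource. The point is that once every customer has a fixed guarantee, the number of customers per cluster becomes a simple division instead of a guess about traffic patterns.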

We used our lab cloud environment this week to build two different customer cloud environments so that we could begin testing with the ACE. We think it will do what we want and now we just have to prove it out. I'll post more next week after we've completed the testing.

Saturday, June 20, 2009

Nexus 2148 Blocks BPDUs

We ran into a small issue this week with the cloud. We can easily overcome it but it seems that Cisco has a few design flaws to work out with the Nexus 2148, or fabric extenders as they refer to them.

As you know from previous posts, we've launched our cloud offering and we've had very good public response to it. We've got about a dozen customers provisioned on it now and the requirement has emerged (we knew it would) to connect a customer cloud environment to their dedicated environment. In this particular case, Oracle RAC isn't supported on VMware (go figure). We have a customer that wants to provision all of their front end web servers in the cloud but use an Oracle RAC implementation in the back end. So basically what we need to do is build a RAC environment in a dedicated space for the customer and have it connect to the customer's cloud infrastructure.

Initially, we were just going to have the customer's RAC environment sit in a dedicated rack somewhere in the data center. We were going to run a couple of cross connects to the cage where our cloud infrastructure sits and then connect it into the Cisco Nexus 2148 fabric extenders since those provide 48 gig copper ports. In the dedicated environment, the customer was going to have a couple of switches for their RAC servers and we'd just connect those switches to the 2148s.....all would be good.

Then we found the problem. The 2148 blocks BPDU (bridge protocol data unit) packets so we can't connect another switch to it. It will send the port into err-disable. The problem is that BPDUs are blocked by default and you can't change this setting. Rats!!! I'm sure Cisco has some great rational explanation for why they did it (like they're trying to get rid of spanning tree or something) but I think it's so you have to take the connection up to the Nexus 5020 or 7000 series and burn your 10G SFP slots. More 10G ports get burned...Cisco makes more money. I know, I know.....I may be being harsh on Cisco and I somewhat understand their rationale but it makes life a little more difficult.

We ultimately decided to just provision a couple of ToR switches (Cisco 3750 or 4948) to service this need. No big deal but it would have been nice to be able to use the ports on those 2148s as we have a lot that aren't going to be used for other purposes.

Note that we did verify you can't disable this feature. Documentation is hard to find to support this but we fully tested it in the cloud lab so if you're out there racking your brain trying to figure this out, give it up for now.

Friday, May 29, 2009

We're Launching The Cloud!

After many months of work, we're finally ready to launch our cloud computing offering. The cloud is built and we completed failover testing yesterday. We're also holding a special open house event/launch party for this new offering and we've already got customers wanting to sign up for the service. It's really picking up steam around here and we're all excited.

We ultimately ended up going with the following networking components for the cloud:

Virtualized Firewall Platform - Juniper ISG 2000s, creating a VSYS for each customer

Switching Platform - Cisco Nexus 5000 and Cisco Nexus 2000 Series Fabric Extenders

Virtual/Shared Load Balancer - F5 BigIP LTM & GTM for global traffic management

I think the most exciting piece of this offering is our ability to rapidly deploy new customer environments. We're promising customers that we can deliver their entire environment from the firewalls to the load balancers to the virtual machines to the storage....all within 5 days from order submission. In the past, we were shooting for 30 days in a typical dedicated environment where the customers had dedicated hardware that had to be purchased and provisioned before we turned it over to them.

From a business standpoint, this is big. If an e-commerce site suddenly experiences increased demand, they can request more virtual machines be added to their application server pools and within 4 hours they'll have them. Anyone that has worked in big dedicated environments can tell you that having that ability significantly increases your ability to add capacity on the fly. It eases the burden in many areas.

Look for the official press releases next week. I just wanted to report that we have completed the build and are in the final stages for launch. After the launch, I plan to provide a few helpful details around the integration of the server/VM environment with the Cisco Nexus switching platform. We learned a few lessons there for sure.

Thursday, May 7, 2009

On Another Note: Rain Water Collection Systems

I know this is supposed to be about data center and network virtualization but I'm going to take a brief break on one posting to discuss rain water collection. The main reason is because I couldn't find much information out there for cost analysis and return on investment potential and I'm sure others could benefit from some real data.

To set the stage, I live in the Raleigh, NC area and I have a ten zone irrigation system for my lawn. The water bills rise from an average of $45 per month to an average of about $200 per month during the summer months when I'm having to irrigate two to three times per week. Because of the amount of money we're talking about, I decided to look into rain water collection systems that could be tied into my irrigation and help offset the water costs each month.

After investigating lots of options, I called up a local company called FreeFlo Water Recycling Systems. They had a system that included underground storage tanks, filtration systems, pumps that could generate about 24 gallons per minute, and optional tie-ins to my home water to service toilets, the dishwasher and the washing machine. It sounded great from the beginning and when they came to discuss my needs, they were very knowledgeable and had lots of ideas for what I would need. They left with all the information and said I would be getting a quote in a couple of days.

The day following that, I had my landscaping guy come over to discuss a couple of other projects I was planning. I shared with him what I was looking into and he asked me some questions about the system. When I told him that we were upgrading the basic package and installing two 1700 gallon tanks, he asked if I had an idea about how much water we used with each irrigation cycle. I had no idea so we worked a few minutes on some numbers to figure it out. With my ten zones and the various types of heads, we calculated roughly between 2200 and 2500 gallons of water each time I irrigated. The wheels started to turn.

The next step was to figure out how much I could possibly save in a given year. The guy from FreeFlo suggested I could cut down my water bill from about $200 during the summer months to about $35-$40 per month. He said he had some customers that had cut their bills from over $300 per month to less than $50 per month.

I started out by figuring how much collection surface I would have. I figured I probably have a good 2000 square feet of roof and I added another 1000 square feet in for the driveway water and any other misc. water I was able to collect. This gave me a total of 3000 square feet of collection surface and I really think this is a best case scenario. For every 1000 square feet of collection surface, you can capture about 600 gallons of water for every 1" of rain. Given that NC averages 42 inches of rain per year, that gives me a maximum amount of rain water I could collect of 75,600 gallons per year.
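For anyone who wants to plug in their own numbers, the calculation above is easy to sketch in Python. The 600 gallons per 1000 square feet per inch is the rule of thumb from this post, and the function and constant names are just ones I made up for the example.

```python
# Rule of thumb from the post: about 600 gallons per 1000 sq ft per inch of rain.
GALLONS_PER_1000_SQFT_PER_INCH = 600


def max_annual_collection(surface_sqft: float, annual_rain_inches: float) -> float:
    """Best case gallons per year, assuming every drop is captured and used."""
    return surface_sqft / 1000 * GALLONS_PER_1000_SQFT_PER_INCH * annual_rain_inches


if __name__ == "__main__":
    # 2000 sq ft of roof plus 1000 sq ft of driveway and misc., 42 inches of rain.
    print(max_annual_collection(3000, 42))  # 75600.0
```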

It's important to understand a little about how the system works. I would have two 1700 gallon tanks giving me a total of 3400 gallons I could store. Basically if you got a couple inches of rain over the course of a few days, you would fill those tanks up and then you would lose the rest to overflow. In order for me to actually collect the best case scenario of 75,600 gallons per year, the rain would have to be evenly spaced out between my irrigation cycles so that I didn't lose any to overflow and the rain would all need to come during the months that I have the irrigation system running. Obviously this is almost impossible and would most likely never happen but that's what would have to happen for me to be able to capture that 75,600 gallons each year and effectively use the water. It's also important to note that at full capacity of 3400 gallons, I don't have enough water to get through two complete irrigation cycles without having city water kick in.
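Here is a rough Python sketch of that overflow problem. It is a toy model and not how any vendor sizes a system: rain fills the tanks up to capacity and the rest is lost, and each irrigation cycle draws from the tanks first and from city water after that. The event list is hypothetical, and I'm using the low end of my 2200 to 2500 gallon estimate per cycle.

```python
TANK_CAPACITY_GALLONS = 3400      # two 1700 gallon tanks
GALLONS_PER_INCH_OF_RAIN = 1800   # 3000 sq ft at 600 gallons per 1000 sq ft
GALLONS_PER_CYCLE = 2200          # low end of the 2200 to 2500 gallon estimate


def simulate(events):
    """Run a sequence of ("rain", inches) and ("irrigate", None) events.

    Returns (gallons left in tanks, gallons lost to overflow, city gallons used).
    """
    stored = overflow = city_water = 0.0
    for kind, inches in events:
        if kind == "rain":
            inflow = inches * GALLONS_PER_INCH_OF_RAIN
            kept = min(inflow, TANK_CAPACITY_GALLONS - stored)
            stored += kept
            overflow += inflow - kept          # anything past capacity is lost
        elif kind == "irrigate":
            drawn = min(stored, GALLONS_PER_CYCLE)
            stored -= drawn
            city_water += GALLONS_PER_CYCLE - drawn  # city water covers the gap
        else:
            raise ValueError(f"unknown event: {kind}")
    return stored, overflow, city_water


if __name__ == "__main__":
    # Hypothetical week: two inches of rain, then two irrigation cycles.
    print(simulate([("rain", 2.0), ("irrigate", None), ("irrigate", None)]))
```

In that example the two inches of rain overfill the tanks by 200 gallons, and the second irrigation cycle still needs 1000 gallons of city water.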

For the purpose of this exercise, let's assume that I am able to capture the full capacity of 75,600 gallons and I'm able to use it. This would save me $558 per year based on my water rates and this really is a best case scenario.

The quote came in for the entire system and they want $11,200 for a turn key installation. The return on investment is over 20 years and this is assuming no further money is put into the system for maintenance during that 20 year period. It's true that water costs could go up but over time my watering needs should also go down due to my lawn becoming more established. At this point, I think I'll look for something with a much quicker ROI. On to solar power.......
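The payback math is just as simple. This sketch uses the numbers from this post and ignores maintenance, rate increases, and the time value of money, so treat it as a best case. The function names are mine, and the per-gallon rate is only what the $558 and 75,600 gallon figures imply, not a published rate.

```python
SYSTEM_COST_DOLLARS = 11_200         # turn key quote
BEST_CASE_SAVINGS_DOLLARS = 558      # per year, from the post
BEST_CASE_GALLONS = 75_600           # per year, from the post


def simple_payback_years(cost: float, annual_savings: float) -> float:
    """Years to recover the cost with flat savings and no maintenance."""
    return cost / annual_savings


def implied_rate_per_1000_gallons(annual_savings: float, gallons: float) -> float:
    """Water rate implied by the savings figure, in dollars per 1000 gallons."""
    return annual_savings / gallons * 1000


if __name__ == "__main__":
    years = simple_payback_years(SYSTEM_COST_DOLLARS, BEST_CASE_SAVINGS_DOLLARS)
    rate = implied_rate_per_1000_gallons(BEST_CASE_SAVINGS_DOLLARS, BEST_CASE_GALLONS)
    print(f"payback: {years:.1f} years at about ${rate:.2f} per 1000 gallons")
```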

Thursday, April 9, 2009

Virtualization Can Leave Your Head Spinning In The Clouds

We're pushing forward full speed now with building our first cloud computing offering at Hosted Solutions. I'll have to admit that this is one of the most exciting projects I've worked on in my career. You hear a lot of talk about virtualization from a server and storage perspective but we're taking it all the way out to the switching infrastructure, the firewalls, and the load balancers. In other words, we're trying to virtualize what we offer as a business in the dedicated environment space. I can't go into too much detail yet because obviously much of this is intellectual property and we want it to be a surprise when we launch the offering.

What I can say is that I think you're about to see the level of complexity for computing skyrocket. There are certainly many advantages for virtualization and there are certainly risks. I won't go into all the risks that everyone else writes about. What I will talk about is the skill set required to support it.

We really started hammering away on the designs this week. We started with the virtualized firewalls (Juniper ISG 2000s) and worked down to the switching layer (Cisco Nexus). When going through each layer, you have to fasten your seat belt and pay close attention because if you don't you'll get lost in the cloud.....literally.

You have physical devices connected to physical cabling using physical interfaces. That's what the Network Engineer in the past had to be an expert in. You had to know how to route traffic from one physical device to another physical device through physical interfaces over physical medium. This of course is an over-simplified explanation but bear with me for a minute.

Now, in addition to all of that you have complete infrastructures built once you get inside of those physical devices. Take the firewall example. Within a single physical device now, you're talking virtual interfaces, virtual routers and virtual firewalls. You could literally go from one customer's virtual firewall to their virtual routing environment through their virtual interfaces into another customer's routing environment and then through their virtual firewall....all within the same physical device. And all of this before you talk about the virtual load balancing and virtual switching environments. Trust me, you can get lost.

We've got some very talented and experienced Network Engineers on my team. We've probably got close to 90 years of combined networking experience among us and there were several times this week where we really did lose the traffic as it worked its way through the design. Needless to say, this was just on paper.

I say all this to say that as we march towards the world of virtualization and tout all of the many business and technological benefits, people have to understand this is not for the faint of heart and you will have to rehone your skills to survive. It will take a considerable investment of your personal time if you are going to be successful in the network of the future. This isn't simply adding a new model router or switch to your environment. It's a new paradigm and it will be challenging. Those that take that challenge will be rewarded for it.

The barriers to entry for new Network Engineers will be great because not only will they need to learn everything we already know about the physical networking world, they will have to learn the virtual world too. This will undoubtedly increase the value of Network Engineers (read "I'm glad I am one") because it will take much longer for people to get up to speed in the networking arena. Should be a fun ride. I can tell you that the technology itself is fun but you must have an extremely strong foundation to understand it. Otherwise, you will get lost in the clouds and never find your way out!

Tuesday, March 10, 2009

Virtualized Firewall Decision Made

I know it's been some time since my last update but that's not because nothing has been going on. It's because so much has been going on which I guess is a very good thing these days! I'm happy to report that all of our research has been completed into the virtualized firewall offerings and we have made our decision.

We started off with Check Point and looked at the Power-1 VSX platform. I wrote about that in the previous blog entry. Since that time, we completed overview presentations with both Cisco and Juniper. We then put together a great comparison matrix which analyzed features and price.

First, let me discuss the Cisco offering. I'm a big fan of Cisco for what Cisco is very good at. However, they've struggled with security over the years and our recent research on virtualized firewall offerings indicated that they're still struggling. They are much better and I do believe ASA is a great improvement over PIX but I still think there is a long way to go. The management solution for the virtualized offering seemed to be split between their local GUI management interface, a central manager and their MARS platform. It wasn't unified, which is something we've been after from the beginning. If you're planning for large growth and need scalability, you need unification and ease of management. Cisco isn't there. They also say that you shouldn't terminate your IPsec tunnels on each virtual system, and when you're a managed hosting provider, this just doesn't work. Again, scalability and unification were key and we felt Cisco fell short.

Next, we looked at Juniper. Juniper has been running the virtual system technology for a number of years and you could tell that the product had been baked. The main findings for Juniper were:

- Multiple options for reserving resources per virtual system

- Very good performance numbers (which they all had at this level)

- The ability to terminate VPN tunnels per virtual system

- The virtual firewalls are managed through the same central manager as the dedicated firewalls

- The total cost of ownership was very attractive (comparable to Cisco, half the cost of Check Point)

- Backing of industry analysts such as Gartner which means a lot when you're a managed service provider trying to prove to your customers that you've made solid choices in the platforms you offer

In summary, Juniper won on this one. We did feel that Check Point had some added features that none of the others did but when you consider the high cost, you can't beat Juniper. I am also happy to report that the Juniper solution is going to be used to protect the cloud computing environment we are building. That's a very exciting project we're working on and I'll cover more on that later as we finalize the solution in the next two weeks.

Wednesday, February 4, 2009

Check Point VPN-1 Power VSX - Virtualized Firewalls

After doing a lot of preliminary research and brainstorming, I finally embarked on the quest to introduce a virtualized firewall product offering at Hosted Solutions this week. As we are pushing more and more towards trying to virtualize everything, the virtual firewall platform is the most obvious choice to start in the areas I am responsible for.

The concept is fairly simple for those familiar with any type of virtualization. We would deploy a cluster of highly available firewall appliances. This highly available cluster would allow us to carve up its resources and partition virtual firewalls on the cluster. In other words, we deploy two appliances in a cluster and build up to 250 virtual firewalls on that one cluster. Each virtual firewall would be dedicated to a particular customer and it would look, smell and act like a dedicated firewall. It could have its own management interface and certainly would have its own security boundaries.

At this stage, we are simply conducting research on the various platforms available that seem to fit the bill. Check Point was the obvious choice to start looking at first because they are the incumbent provider of the firewalls we sell and manage for many of our customers.

Check Point's solution for virtualized firewalls is the VPN-1 Power VSX. This platform can support up to 250 virtual systems from a single cluster which is pretty amazing. Check Point presented the product to us today and here are a few key notes I took away:

- Capable of running up to 250 virtual systems on a single cluster

- Each virtual system has its own routing and switching domain and appears in SmartDashboard to be a unique firewall

- Supports BGP and OSPF routing (not sure if you have to upgrade to SPLAT pro as you did with the non-virtualized VPN-1 platform)

- Each virtual system can have weighted resources to control how much of those resources a single customer can consume. As we dug into this more, we found out that CPU consumption is really the only resource that can be controlled this way.

- If you have a three node cluster, you can actually do some pretty amazing things in terms of high availability. Check Point introduces the concept of not only a primary firewall and standby firewall, but now there can be a backup firewall on the third node. This is a neat feature especially for high availability environments like the ones we support. In this configuration if a primary firewall fails, the standby takes over duties. The backup firewall is then promoted as the new standby. When the failed node comes back online, it becomes the new backup. This ensures that there is always a primary and standby so you could really go after a 100% SLA with this type of product. Smart thinking.

- The performance data scales linearly as you add additional nodes to the cluster (up to 6). There were some pretty good performance stats being shown where Power VSX was running on SecurePlatform on two Dell 2950 servers.
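The three-node failover behavior described in the notes above can be sketched as a simple role rotation. This is only an illustration of the promotion order as Check Point presented it to us, not how VSX implements it; the `Cluster` class and the node names `fw1`, `fw2` and `fw3` are made up for the example.

```python
from dataclasses import dataclass, field

# Roles in promotion order; a node's position in the list decides its role.
ROLES = ("primary", "standby", "backup")


@dataclass
class Cluster:
    """Hypothetical model of a three-node cluster (not a Check Point API)."""
    active: list                      # healthy nodes, ordered by role
    failed: list = field(default_factory=list)

    def roles(self):
        # Pair each healthy node with the role matching its position.
        return dict(zip(ROLES, self.active))

    def fail(self, node):
        # Removing a node moves every node behind it up one role.
        self.active.remove(node)
        self.failed.append(node)

    def recover(self, node):
        # A recovered node rejoins at the end of the line.
        self.failed.remove(node)
        self.active.append(node)


cluster = Cluster(["fw1", "fw2", "fw3"])
cluster.fail("fw1")      # fw2 becomes primary, fw3 becomes standby
cluster.recover("fw1")   # fw1 returns as the new backup
print(cluster.roles())
```

The point of the third node is visible in the middle step: even with one node down, there is still both a primary and a standby.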

Monday, January 12, 2009

Data Center Ethernet: A Solution For Unified Fabric

There's a lot of buzz these days in the network world around the "unified fabric". To put it simply, unified fabric refers to collapsing all the various connectivity mechanisms/technologies onto a single transport medium. Traditionally in large data center environments, you may have the following different segments connecting various technologies and/or serving various functional purposes:

- Front End Network. This is basically the primary public facing network and the one connecting to the Internet or large corporate network.

- Storage Network. This is the network connecting all the servers to the various storage arrays. You'll primarily see Fibre Channel technology being used and specialized SAN fabric switches used for connectivity.

- Back End Network. This is generally the network connecting all the servers to the various backup solutions providing backup/restore services to the environment.

- Management Network. This is a back end network of sorts but has a primary focus of providing management services to the environment. A management network typically provides the transport used by the management entity or NOC to gain remote access to the environment.

- InfiniBand Network. This network uses InfiniBand technology for high performance clustered computing.

It would surely make life much simpler from an administrative standpoint and there are several obvious cost advantages by making this a reality (fewer NICs, HBAs, cabling, fiber, switch types, etc.). Cisco's Data Center Ethernet promises to help do just that.

Ethernet is already the predominant choice for connecting network resources in the data center. It's ubiquitous, understood by engineers/developers around the globe and has stood the test of time against several challenger technologies. Cisco has built upon what we know as Ethernet: Data Center Ethernet is a collection of technologies designed to improve and expand the role of Ethernet networking in the data center. These technologies include:

- Priority-based Flow Control (PFC). PFC is an enhancement to the pause mechanism within Ethernet. The current Ethernet pause option stops all the traffic on the entire link to control traffic flow. PFC creates eight virtual links on each physical link and allows any of these links to be paused independent of the others. When I was at Cisco a few weeks ago we covered this in detail. As a joke I asked them if they had 8 channels because Ethernet cabling has 8 separate wires (I know, networking jokes are quite complicated). They laughed and said that was in fact where the idea came from. They originally intended to send each virtual link down a different physical wire. It was funny for all of us anyway.

- Enhanced Transmission Selection. PFC can create eight distinct virtual link types on a physical link, and it can be advantageous to have different traffic classes defined within each virtual link. Traffic within the same PFC class can be grouped together and yet treated differently within each group. Enhanced Transmission Selection (ETS) provides prioritized processing based on bandwidth allocation, low latency, or best effort, resulting in per-group traffic class allocation.

- Data Center Bridging Exchange Protocol (DCBX). DCBX is a discovery and capability exchange protocol that Cisco DCE products use to discover peers in Cisco Data Center Ethernet networks and to exchange configuration information between Cisco DCE switches.

- Congestion Notification. Traffic management that pushes congestion to the edge of the network by instructing rate limiters to shape the traffic causing the congestion.
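To make the PFC idea from the list above concrete, here is a toy model of a link with eight virtual links where one can be paused without stopping the others. It is a minimal sketch, not Cisco's implementation: the `PfcLink` class, the frame names and the strict highest-priority-first send order are all assumptions made for the illustration.

```python
from collections import deque

NUM_PRIORITIES = 8  # PFC creates eight virtual links per physical link


class PfcLink:
    """Hypothetical model of one physical link with per-priority pause."""

    def __init__(self):
        self.queues = [deque() for _ in range(NUM_PRIORITIES)]
        self.paused = [False] * NUM_PRIORITIES

    def enqueue(self, priority, frame):
        self.queues[priority].append(frame)

    def pause(self, priority):
        # Pause a single virtual link; the other seven keep flowing.
        self.paused[priority] = True

    def resume(self, priority):
        self.paused[priority] = False

    def transmit_round(self):
        # Send at most one frame from each unpaused priority, highest first.
        sent = []
        for priority in reversed(range(NUM_PRIORITIES)):
            if not self.paused[priority] and self.queues[priority]:
                sent.append(self.queues[priority].popleft())
        return sent


link = PfcLink()
link.enqueue(3, "storage-frame")
link.enqueue(0, "web-frame")
link.pause(3)                 # only the storage class is held back
print(link.transmit_round())  # the web frame still goes out
link.resume(3)
print(link.transmit_round())  # now the storage frame is sent
```

With the classic Ethernet pause, the first round would have sent nothing at all because the whole link stops.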

Labels: Cisco, Data Center, Data Center Ethernet, Unified Fabric

Monday, January 5, 2009

Cisco's Virtual Switching System (VSS)

I first learned about Cisco's Virtual Switching System (VSS) back at the 2008 Cisco Networkers conference in Orlando. Now that it's officially in production and there are several successful case studies, I figured it was the appropriate time to write about it.

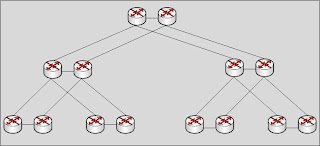

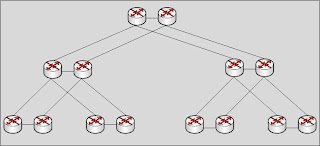

VSS brings a much greater depth of virtualization to the switching layer and actually helps solve many of the challenges Network Engineers face when building out large switching environments. To put it in a nutshell, VSS allows an engineer to take two Cisco Catalyst 6500 series switching platforms and "virtualize" or collapse them so they appear to be one switch. Here is a basic diagram for how your layer two environment would normally appear for a highly redundant data center switching architecture:

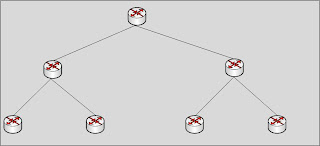

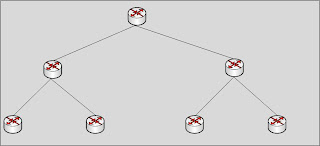

VSS basically allows you to collapse each of these switch pairs into a virtual switch so your new high level architecture would look like this:

From an engineering standpoint, this has several advantages and the new VSS technology combined with other technologies allows us to:

1) Cut down on the number of ports and links that are serving in a passive only capacity. In the past, one set of the redundant links was automatically implemented in a passive state thanks to Spanning Tree Protocol (STP). STP was originally designed to help prevent loops in the network but is now commonly implemented as a redundancy mechanism. By using VSS and another technology called Multichassis EtherChannel, you can basically get to a state where all links are active and passing traffic. This increases capacity, allows you to use ports and links previously unusable unless a failover occurred and allows you to cut down on the number of ports. Active/passive architectures only use 50% of available capacity, adding considerable expense to the project.

2) Manage fewer network elements. With VSS we get one control plane for each VSS cluster so these appear as one switch. One switch to manage instead of two has its obvious benefits.

3) Use all NICs on the servers in the infrastructure. As with the combined links between the switches, you can also have combined links for the servers using the same technology. Multichassis EtherChannel (MEC) allows you to connect a server to two physically separate switches and use both connections for an active/active implementation. This is a pretty big step in that we immediately double the capacity of our servers with the same number of NICs while still providing the highest level of redundancy.

4) Build bigger backbones. The performance of a VSS cluster is almost exactly twice that of one standalone 6500 platform. You might think this would be obvious being that you're using two switches so of course performance should be twice as much. But the fact that Cisco has managed to pull it off across two physically separate chassis that appear as one logical switch is pretty amazing, at least from this engineer's perspective.
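The capacity argument in points 1 and 3 is simple arithmetic, sketched below. The two 10 Gbps uplinks are hypothetical numbers picked for the example, and the function is just a helper for the illustration, not anything from Cisco.

```python
def usable_capacity_gbps(links, link_speed_gbps, active_fraction):
    """Bandwidth actually carrying traffic for a set of redundant links."""
    return links * link_speed_gbps * active_fraction


# Hypothetical access switch with two 10 Gbps uplinks.
uplinks = 2
speed_gbps = 10

# Active/passive with STP: half of the links sit idle until a failover.
stp = usable_capacity_gbps(uplinks, speed_gbps, active_fraction=0.5)

# VSS with Multichassis EtherChannel: every link forwards traffic.
mec = usable_capacity_gbps(uplinks, speed_gbps, active_fraction=1.0)

print(f"STP active/passive: {stp} Gbps usable")
print(f"VSS with MEC:       {mec} Gbps usable")
print(f"Gain: {mec / stp:.0f}x from the same ports and cabling")
```

The same math applies to a server with two NICs, which is why the active/active setup doubles server capacity without adding hardware.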

I think that's a good summary. This is exciting stuff, and it gives all of us engineers who have had our share of STP issues over the years some hope for the future!
